Computers in photography are not a new thing by any stretch of the imagination. Every camera of the digital age has required processing power to create the image. Even before the dawn of digital, processors were used in film cameras. They controlled things such as auto exposure modes, autofocus and flash output.

These days, computers in our cameras are extremely powerful. They can analyse the type of scene in front of our lens, the speed and direction of a tracked subject and even lock onto a subject’s eye. They are quite incredible. However, as good as our processors are, they are simply aiding our photography.

What is Computational Photography?

There are several definitions of computational photography, but to put it simply, it is when a camera uses processing power to overcome the limitations of its hardware. Most commonly, that hardware is either the sensor or the lens.

The potential for computational photography has been known about for some time. However, it’s only in recent years that in-camera processors have become powerful enough to achieve it.

We have been using our laptop and desktop processors for years to achieve computational photography in post-production.

Classic examples of this are HDR, high dynamic range photography, and noise reduction. We wrote an entire article on how shooting multiple images can drastically reduce noise in your shots. The difference today is that our cameras can do this in real time, without the need for us to return to our computers, upload the shots, and process them in an editing app.
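To show why stacking multiple shots reduces noise, here is a minimal Python sketch. It assumes the frames are already perfectly aligned and that the noise is random and independent from shot to shot. The flat grey "scene", the noise level and the function names are all invented for illustration and are not taken from any camera or editing app.

```python
import random
import statistics

def capture_noisy_frame(scene, noise_sigma, rng):
    """Simulate one exposure: the true pixel values plus random sensor noise."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]

def average_frames(frames):
    """Average aligned frames pixel by pixel."""
    count = len(frames)
    return [sum(values) / count for values in zip(*frames)]

def noise_level(image, scene):
    """Standard deviation of the error against the known true scene."""
    return statistics.pstdev([p - s for p, s in zip(image, scene)])

rng = random.Random(42)               # fixed seed so the run is repeatable
scene = [100.0] * 10_000              # hypothetical flat grey patch
frames = [capture_noisy_frame(scene, 10.0, rng) for _ in range(16)]

single_noise = noise_level(frames[0], scene)
stacked_noise = noise_level(average_frames(frames), scene)
print(f"single frame noise:     {single_noise:.2f}")
print(f"16-frame average noise: {stacked_noise:.2f}")
```

Under these assumptions, averaging N frames cuts random noise by roughly the square root of N, so 16 frames should leave about a quarter of the noise of a single shot. Real scenes also need alignment and handling of moving subjects, which this sketch ignores.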

There is one type of device that is driving computational photography more than any other, and that is the smartphone. Let’s look at where we are today.

Computational Photography In 2019

In September 2019, Apple announced the iPhone 11 Pro. The Pro moniker implied that their new smartphone was a professional photographic tool. This, as with most Apple products, was met with equal measures of derision and intrigue, especially from the photographic world.

As is often the case with Apple products, what they offered to photographers in the iPhone 11 Pro was neither revolutionary nor new. It was, however, given the Apple treatment. That is to say, it was made both very usable and of high quality. The driving force that makes the iPhone 11 Pro so intriguing is its current and future potential for computational photography.

Smartphone cameras have been improving in an almost exponential way. The yearly releases by Apple, Samsung, Google, Huawei et al have added some incredible features.

Their cameras, however, have been, and for the foreseeable future will be, confined by the phone’s physical dimensions. That means very small sensors, very small lenses and all the technical issues that go with them.

Smartphone photographers do not want to give up the ultra slim, compact designs of their devices, but they do want to be able to achieve the look of larger cameras. This is a major factor driving the development of computational photography now.

HDR, Bokeh and Stabilisation

These are the three staples of computational photography as of today. They have been recently joined by high key black and white and night modes. The latter demonstrate how the power of processors is becoming more and more important in photography. But how do they work?

As photographers we are generally used to the one shot approach. We press the shutter, take one shot then press the shutter again. Even the very fastest continuous modes work in a similar way. They simply continue to take single shots until we release the shutter button.

In computational photography, when we press the shutter the camera will take multiple images virtually simultaneously. It will then process those images in real time into a single shot. HDR is the simplest form of this and has been around for a while. The camera takes a 5-6 shot bracket and merges them immediately. This is a relatively simple process that does not require huge amounts of processing power.
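As a rough illustration of merging a bracket, the Python sketch below blends five simulated exposures by giving each pixel more weight in the frames where it is closest to mid-grey. This is a simplified exposure-fusion approach chosen for illustration, not the method any particular camera or phone uses. The scene values, gains and function names are all hypothetical.

```python
import math

def well_exposed_weight(value, sigma=0.2):
    """Weight near 1 for mid-tones, small for clipped shadows or highlights."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))

def expose(scene, gain):
    """Simulate an exposure: scale scene brightness and clip to the 0..1 range."""
    return [min(1.0, max(0.0, radiance * gain)) for radiance in scene]

def fuse_bracket(frames):
    """Blend aligned frames, favouring the best-exposed version of each pixel."""
    fused = []
    for values in zip(*frames):
        weights = [well_exposed_weight(v) for v in values]
        total = sum(weights)
        fused.append(sum(w * v for w, v in zip(weights, values)) / total)
    return fused

# Hypothetical scene brightness, from deep shadow to bright sky.
scene = [0.02, 0.1, 0.5, 2.0, 8.0]

# A five-shot bracket, two stops apart; the middle frame is the "normal" exposure.
bracket = [expose(scene, gain) for gain in (0.0625, 0.25, 1.0, 4.0, 16.0)]
normal = bracket[2]
fused = fuse_bracket(bracket)

for n, f in zip(normal, fused):
    print(f"normal exposure: {n:.2f}   fused: {f:.2f}")
```

In the normal exposure the brightest pixels clip to pure white and the darkest sit close to black. In the fused result both move towards the mid-tones, which is the basic effect an HDR merge is after.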

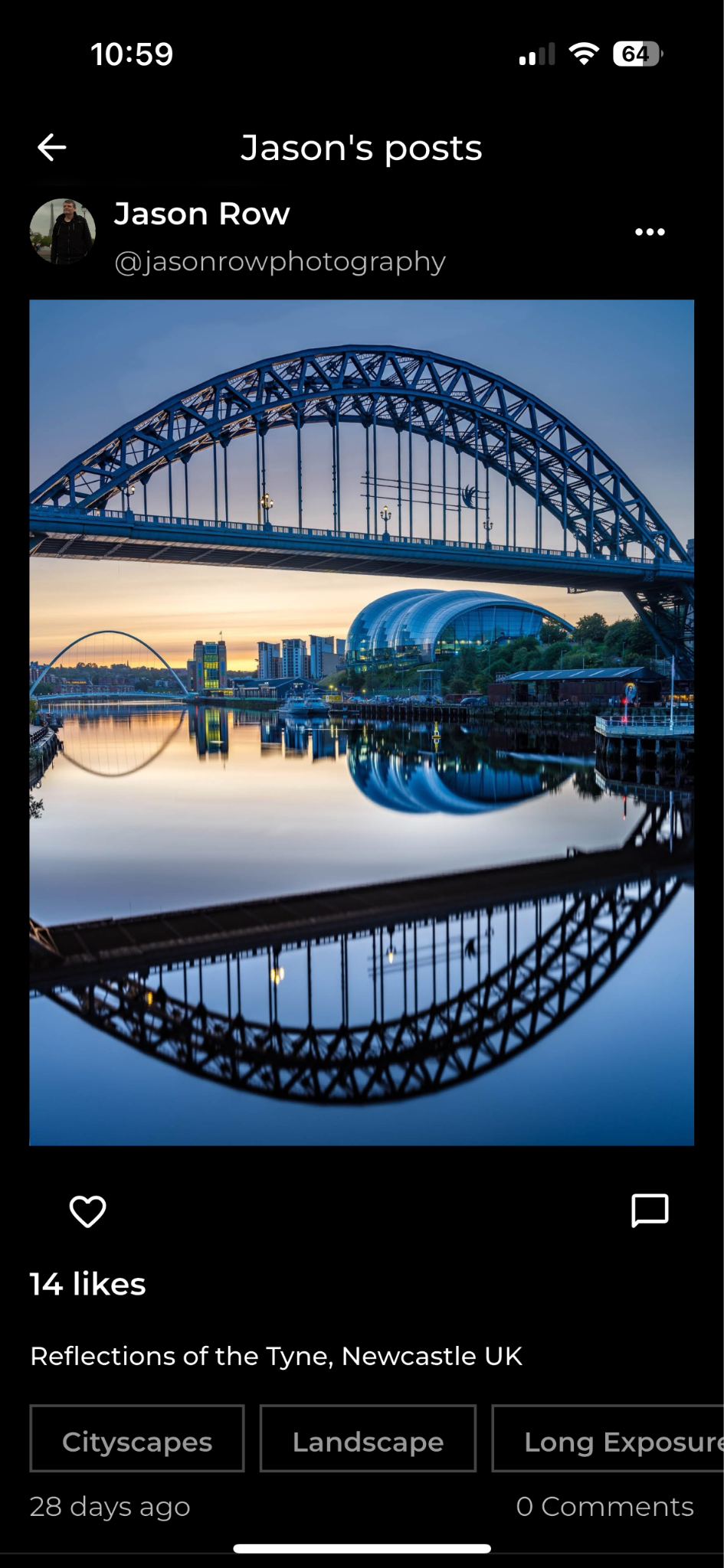

Step up to Bokeh, however, and we can see how powerful modern smartphones are. Bokeh in physics-based photography requires large sensors and wide-aperture, fast lenses of at least a moderate focal length. That is clearly impossible in a phone.

To counter this, the smartphone takes multiple images, each concentrating on a specific technical detail. For example, it might take shots to control exposure, focus, tone, highlights and shadows, and to recognise faces. It will then merge them, analysing all the data within each shot, and attempt to mask the subject from the background. It will then add a blur to that background to simulate Bokeh. All of this is done virtually in real time.
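The final mask-and-blur step can be sketched in a few lines of Python. This assumes the subject mask already exists (in a real phone it would come from the analysis described above) and uses a plain box blur as a stand-in for whatever blur a phone actually applies. The tiny 4x4 image, the mask and the function names are invented for illustration.

```python
def box_blur(image, radius):
    """Blur a 2D grid of brightness values by averaging each pixel's neighbourhood."""
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            neighbours = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(sum(neighbours) / len(neighbours))
        blurred.append(row)
    return blurred

def simulate_background_blur(image, subject_mask, radius=1):
    """Keep masked subject pixels sharp and use blurred pixels everywhere else."""
    blurred = box_blur(image, radius)
    return [
        [sharp if is_subject else soft
         for sharp, soft, is_subject in zip(image_row, blurred_row, mask_row)]
        for image_row, blurred_row, mask_row in zip(image, blurred, subject_mask)
    ]

# Hypothetical 4x4 frame: an even-toned subject in the centre of a busy background.
image = [
    [0, 9, 0, 9],
    [9, 5, 5, 0],
    [0, 5, 5, 9],
    [9, 0, 9, 0],
]
subject_mask = [
    [False, False, False, False],
    [False, True,  True,  False],
    [False, True,  True,  False],
    [False, False, False, False],
]

result = simulate_background_blur(image, subject_mask)
for row in result:
    print([round(value, 2) for value in row])
```

Note that this naive version lets subject pixels bleed into the blurred background around the edges of the mask. Handling those edges cleanly, and varying the blur with distance, is where much of the real processing effort would go.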

Night modes and high key filters use similar processor intensive techniques. And these are really only just the start.

The Future Of Computational Photography

The DSLR is dead, is the cry from many photography websites these days. And to be honest, they are almost certainly correct. However, not for the reasons you might expect. Size, video capabilities and many other reasons are touted as to why companies are shifting to mirrorless but this is not the full story.

The reason that the likes of Nikon, Canon, Sony and Panasonic are throwing themselves into mirrorless is the format’s potential to use computational photography.

The primary handicap that holds back the DSLR is the mirror. In mirrorless cameras, there is no obstruction to the sensor. That’s why they can feed a live image directly from the sensor to the LCD or viewfinder. That, in turn, means the camera can be analysing a scene live, well before you press the shutter, and this makes computational photography a real possibility in mirrorless cameras.

So what is holding them back? The only reason we do not have a lot of computational photography in mirrorless at the moment is the same reason we do have it in smartphones: processing power. The amount of data being pulled from compact system sensors is much, much larger than on smartphones, and these cameras only have the processing power for limited techniques.

That is changing rapidly though, and if you want to see how, just look back at video capabilities over the last few years. A while ago, the standard video format for stills cameras was 1080p at 24fps. Now most new cameras shoot 4K at 60fps. That’s a quantum leap in processing power, and in just a few short years. Computational photography for mirrorless cameras is knocking at the door and that door will be open very soon.
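To put a number on that jump, the short Python calculation below compares how many pixels per second each video format has to handle. It assumes 1080p means 1920x1080 and 4K means UHD at 3840x2160 (some cameras use the slightly wider 4096x2160 instead), and it ignores bit depth and compression.

```python
def pixels_per_second(width, height, fps):
    """Raw pixel throughput for a video format, ignoring bit depth and compression."""
    return width * height * fps

full_hd_24 = pixels_per_second(1920, 1080, 24)   # 1080p at 24fps
uhd_4k_60 = pixels_per_second(3840, 2160, 60)    # 4K (UHD) at 60fps

print(f"1080p at 24fps: {full_hd_24:,} pixels per second")   # 49,766,400
print(f"4K at 60fps:    {uhd_4k_60:,} pixels per second")    # 497,664,000
print(f"ratio: {uhd_4k_60 / full_hd_24:.0f}x")               # 10x
```

On these assumptions, the newer format pushes ten times as many pixels through the camera every second.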

It’s Cheating Isn’t It?

There is going to be a rich vein of purists who will not be happy about computational photography. “It’s cheating,” they will cry. “It’s not pure photography,” they might say. But of course the same type of people said the same things when photography went from glass to celluloid, from black and white to colour and from film to digital. The fact is that photography is an ever-evolving technology and always will be. The best photographers have always been the ones that respect and understand the past but are also happy to embrace the future.

3 Comments

Great article Jason! Back in 2008, I worked for a year at a camera store selling photography equipment. In the backroom, as a joke, someone made and hung up a poster that showed a guy holding a Nikon camera. The tagline said, “The NEW Nikon D9000 Pro! You can take Professional Pictures Immediately!” The insinuation being that the camera came with a pro photographer attached to it. At the time, it was really funny. Little did I know what the future held, including the soon to be announced demise of that photography store chain.

Thanks Kent. I think we are probably not far off the point where cameras will be able to “advise” on basic composition, probably via data connections to neural networks. Things like Siri and Alexa do this already; it’s only a matter of time before it’s applied to photography.

Nit-picking, but the phone’s blurring isn’t simulating bokeh. It is mimicking out of focus areas. Bokeh is the quality of the out of focus area. Obviously that blurring in the phone’s computation has a quality. But that quality is like choosing Gaussian, box or median blur in Photoshop.

Bokeh is a function of the design of the lens. Some lenses have a smooth quality to the out of focus area. Some have a swirly out of focus area etc. Smooth and swirly would be bokeh (descriptions of the quality of that blur).