About a decade ago, people with still cameras would not have dreamed of making videos with their cameras. Times have changed, and in one of my recent articles I wrote a basic introduction to the art of videography.

Video and stills sit side by side in the capabilities of our cameras. They share many similarities but there are some fundamental differences as well. It’s important to know and understand these differences as they can significantly affect the quality of your video footage.

Today we are going to look at two areas where video and still captures take different approaches: the technical realm and the creative realm.

The Technical Differences – Shutter Speed

If there is one key technical difference between video and stills, it’s shutter speed. This confuses many photographers because we are so aware of it and what it does. In photography, a high shutter speed is used to freeze motion. A slow shutter speed blurs motion.

In video, however, we nearly always want to have blur in each individual frame. It’s this blur that conveys the sense of motion. If we shot video with a high shutter speed, each individual frame would be sharp. Even the moving elements within each frame would be sharp. If we played back that footage, it would look very “staccato”. If you want to see this look, check out the opening twenty minutes of Saving Private Ryan. The footage was shot at a high shutter speed in order to make the action look very uncomfortable for the viewer.

Our goal in making smooth footage is to have blur in the moving elements of each frame. To do this, as we mentioned in the previous article, we should choose a shutter speed whose denominator is twice our frame rate. For instance, if our frame rate is 30 frames per second, we should shoot with a 1/60s shutter speed.
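The rule above is simple arithmetic, and a few lines of Python can make it concrete. This is only a sketch: the function name `shutter_for_frame_rate` is made up for illustration, and it assumes whole-number frame rates.

```python
from fractions import Fraction


def shutter_for_frame_rate(fps: int) -> Fraction:
    """Exposure time in seconds suggested by the 'twice the frame rate' rule: 1 / (2 * fps)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1 / (2 * Fraction(fps))


# Print the suggested shutter speed for some common frame rates.
for fps in (24, 25, 30, 60):
    print(f"{fps} fps -> {shutter_for_frame_rate(fps)} s")
```

At 24fps the rule gives 1/48s. Many cameras do not offer that exact value, in which case the nearest available setting, typically 1/50s, is close enough.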

The Technical Differences – Frame Rates/Bit Rates/Codecs

Frame rate is the number of frames we shoot per second. Traditional movies were shot at 24 frames per second and to this day that frame rate is used in the “cinematic look”.

The frame rates of 25 and 30fps are throwbacks to the days of cathode ray television. Although pretty much any device can show such frame rates, it’s good practice not to mix different frame rates on the same editing timeline.

There are also 50 and 60fps. These give a very smooth look, useful for fast-moving action. They are also useful for slow motion: if you place the clip on a timeline whose frame rate is half that of the original clip, you can get some very nice-looking half-speed footage. Frame rates above 60fps are generally used for ultra slow motion. Many cameras now have the ability to shoot 120fps or even 240fps, but at reduced resolution.
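To see how much a clip slows down, divide the frame rate it was shot at by the frame rate of the timeline. Here is a rough sketch of that calculation; the function names are hypothetical, and it assumes the editor plays every captured frame once at the timeline rate.

```python
def slow_motion_factor(capture_fps: float, timeline_fps: float) -> float:
    """How many times slower than real life the clip plays on the timeline."""
    return capture_fps / timeline_fps


def playback_duration(real_seconds: float, capture_fps: float, timeline_fps: float) -> float:
    """Seconds of screen time produced by real_seconds of recorded action."""
    return real_seconds * capture_fps / timeline_fps


# 60fps footage on a 30fps timeline plays at half speed.
print(slow_motion_factor(60, 30))

# Two seconds of action shot at 120fps and played on a 24fps timeline.
print(playback_duration(2, 120, 24))
```

So a two-second moment shot at 120fps stretches to ten seconds on a 24fps timeline, which is why the higher frame rates are reserved for ultra slow motion.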

Bit rates may sound slightly alien to photographers. The bit rate is the amount of information recorded for each second of video. A typical 4K camera may record at 200 Mbps (megabits per second). For a given codec, the higher the bit rate, the better the image quality. A video clip that has lots of motion will require a higher bit rate than one with little motion. This is because there is much more information in the clip with motion.
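Bit rate also tells you how quickly your memory card will fill up. As a back-of-the-envelope sketch (the function name is made up, and it ignores audio and container overhead), we can turn megabits per second into gigabytes:

```python
def clip_size_gigabytes(bit_rate_mbps: float, duration_seconds: float) -> float:
    """Approximate file size in decimal gigabytes for a clip at a constant bit rate."""
    megabits = bit_rate_mbps * duration_seconds
    megabytes = megabits / 8  # 8 bits per byte
    return megabytes / 1000   # 1000 megabytes per decimal gigabyte


# One minute of footage at 200 Mbps.
print(clip_size_gigabytes(200, 60))
```

One minute at 200 Mbps comes to about 1.5 GB, so a 64 GB card holds roughly 40 minutes of such footage.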

Codecs are not dissimilar to JPEG compression. As photographers we know that JPEGs can be saved at different compression rates. A lower number gives higher compression and worse image quality.

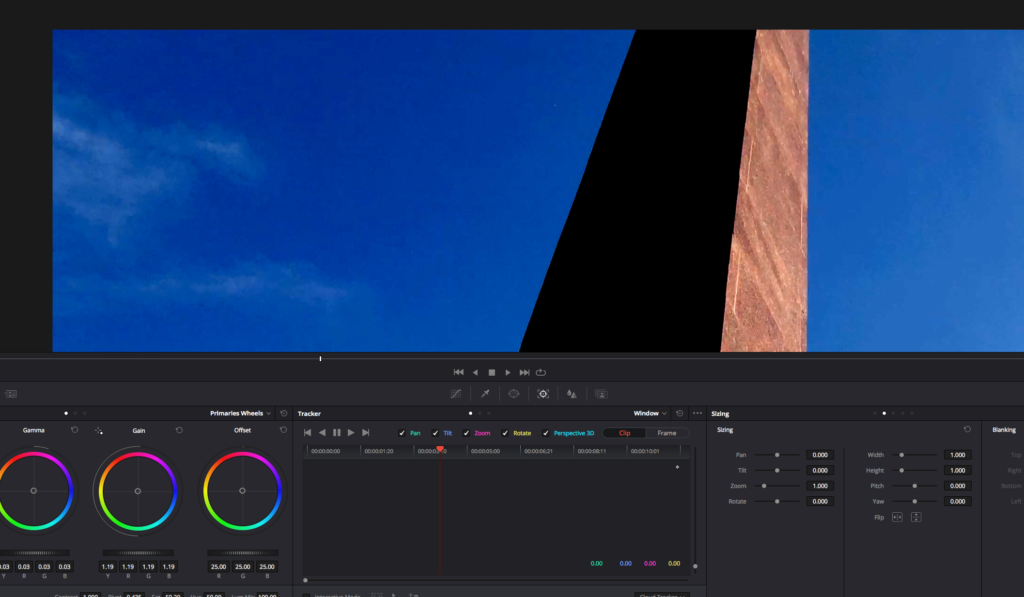

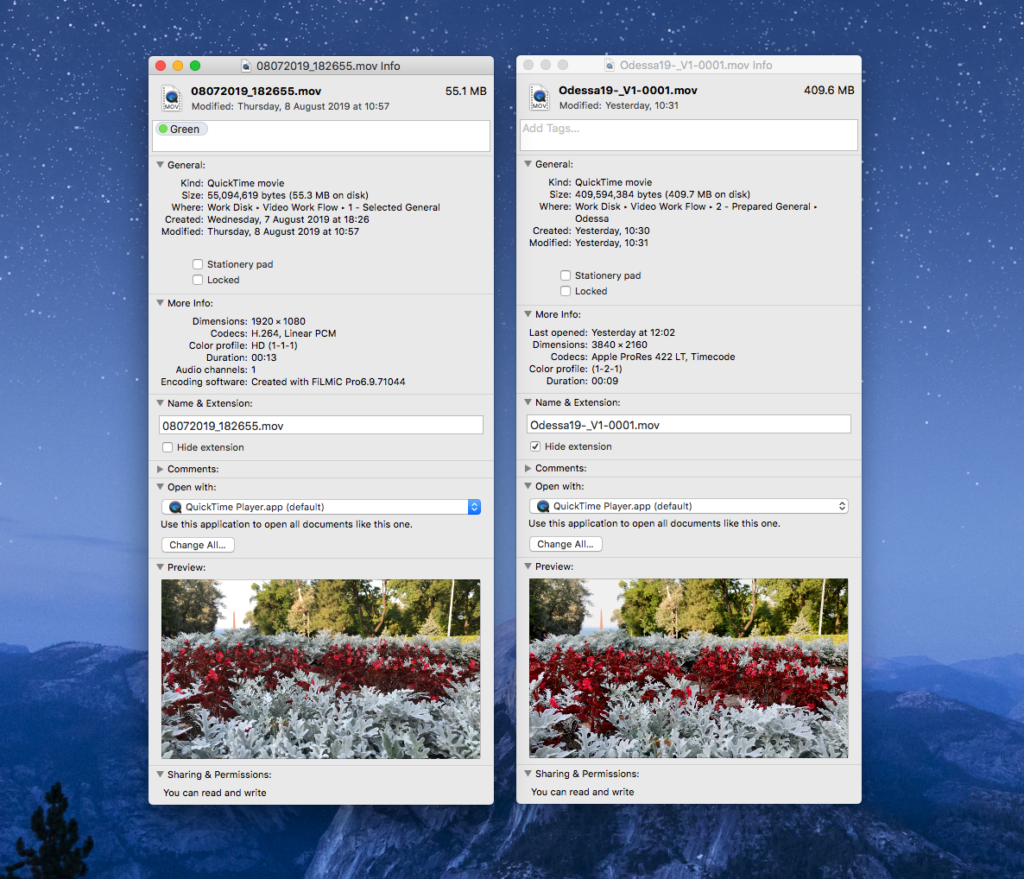

A video codec plays a similar role to the JPEG compression setting, since it defines how and how much we compress video. There are three types of codecs that are often used – the camera codec, the editing codec and the delivery codec.

Camera codecs tend to compress the video file as much as possible whilst maintaining quality. This is because the video data has to be small enough to be written to memory cards quickly and because the processing power of cameras is quite low. Typical camera codecs are H.264 and H.265.

Editing codec files are much larger and come with higher quality. When we transfer footage from our cameras into an editor, it’s best to transcode the footage to an editing codec. Footage in an editing codec is less demanding for the computer to decode, so it plays back and scrubs more smoothly while we edit. One of the most common editing codecs is Apple ProRes.
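Most editors can do this transcoding for you, but it can also be done with the free command-line tool FFmpeg. The sketch below only builds the command; it assumes FFmpeg is installed with its `prores_ks` encoder, the file names are placeholders, and the helper name `prores_transcode_command` is made up for illustration.

```python
def prores_transcode_command(source: str, destination: str, profile: int = 3) -> list[str]:
    """Build an FFmpeg command that transcodes a camera file to Apple ProRes.

    Profile 3 selects ProRes 422 HQ in FFmpeg's prores_ks encoder.
    Audio is converted to uncompressed 16-bit PCM.
    """
    return [
        "ffmpeg",
        "-i", source,               # camera original, e.g. an H.264 file
        "-c:v", "prores_ks",        # FFmpeg's ProRes video encoder
        "-profile:v", str(profile),
        "-c:a", "pcm_s16le",        # uncompressed audio
        destination,                # ProRes normally lives in a .mov container
    ]


# Placeholder file names; pass the list to subprocess.run() to actually transcode.
print(" ".join(prores_transcode_command("clip.mp4", "clip_prores.mov")))
```

Expect the ProRes file to be several times larger than the camera original, so make sure you have the disk space before converting a whole shoot.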

Delivery codecs vary in quality depending on what the end use will be. For online streaming, a heavily compressed codec such as H.264 might be used. For higher end output such as broadcast, much higher quality codecs should be used.

The Technical Differences – Stabilization

The other core technical difference is the way we stabilize our cameras. For many stills shots, we can handhold the camera and match the shutter speed to the focal length, for example shooting at around 1/50s or faster with a 50mm lens. In video, however, handholding a camera leads to shaky footage that is very uncomfortable to watch.

When we shoot video, we need some form of stabilization. Let’s look at the options:

- Lens Stabilization or IBIS – Built-in stabilization, either in the lens or in the camera body (IBIS stands for in-body image stabilization). Here the optics or the sensor will move to counter the movement of the videographer’s body. It works well on wider lenses and where the shooter is not moving too fast or erratically. Some cameras allow a combination of lens stabilization and IBIS for greater stability.

- Three Axis Gimbals – A gimbal is a handheld device which uses computer-controlled motors and gyroscopes to detect and counter movement around three axes – yaw, roll and pitch. They are very effective for controlling most types of motion. However, as they cannot stabilize up and down movement, they are not so good when the videographer is walking or running with the camera. This can be overcome using a technique called the Ninja Walk.

- Video Tripod – Photographers will find video tripods familiar as they are very similar to photographic tripods. Indeed, photographic tripods can be given a video head. The key difference is that dedicated video tripods have a bowl mount for the head. A good video head is fluid and moves only in the pan and tilt planes. However, you can't use it to mount a camera in portrait orientation.

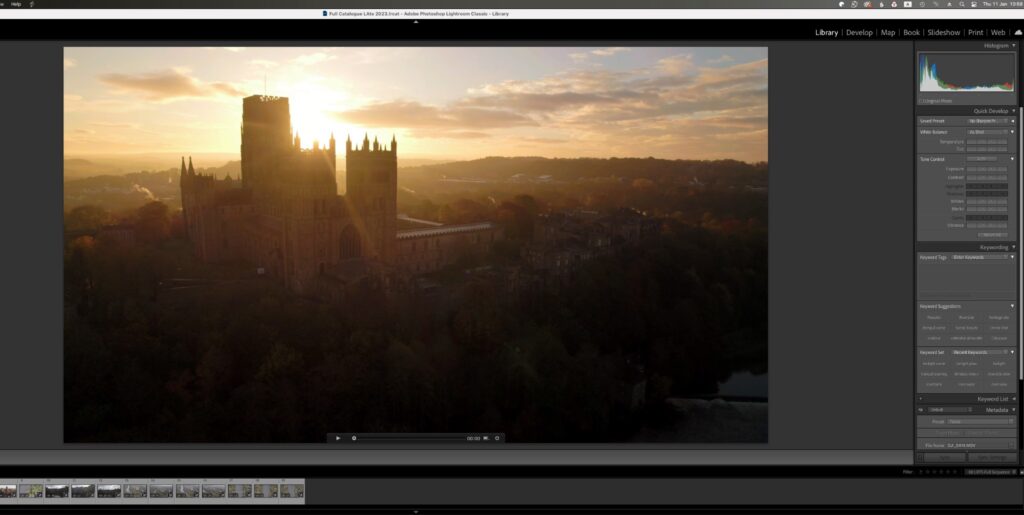

Creative Differences – Motion

The key creative difference between photography and video is how we convey motion. In photography we can choose to freeze it or we can choose to enhance it using a slow shutter speed. In video, motion is an integral part of the shot – without it, we just have a photograph. A 10-second clip of a building is just a photograph unless there is some form of motion in the shot. There are two ways to show motion in video:

- Motion within the scene – In our building example, motion within the scene could be cars or people passing by, clouds over the building or even someone opening a window in that building. We are looking for something or someone to give movement to a static scene.

- Moving the camera – If we cannot find any movement within the scene then we can move the camera instead. In the case of the building, this might be a simple tilt shot from top to bottom using a video tripod and head. We might also use a gimbal to move the camera in the horizontal or vertical plane.

Creative Differences – Composition

Many of the key photographic compositional rules are equally important in video. The most important one is probably the rule of thirds. The main reason for this is the elongated aspect ratio of video compared to stills. The 16:9 ratio is ideally suited for positioning subjects on either the left or the right third of the frame.

Many other compositional rules work well too. Negative space, leading lines and color contrast are all elements that translate well to video. However, for them to truly work you need to introduce some motion.

Moving the camera and maintaining good composition is one of the trickier aspects of film making.

Creative Differences – Storytelling

When we take a photograph, it is often intended to be a standalone representation of the scene in front of us. When we shoot a video clip, it is generally meant to be one element of a greater story, a story that will be created in post production.

For that reason we need to think more about shots that will work together to create the story. Returning to our building example, we might start the story with a wide establishing shot of people and cars passing by.

Then we might zoom in on a person walking towards the building. The next shot in the sequence might be a closeup of that person’s hand pushing the door handle, followed by their feet entering the room.

Many cameras today give us exceptional video capabilities. We now find them even in cameras costing $500. However, many photographers still ignore or fear creating videos. They are worried that they lack the required skills.

There's nothing to worry about – if you understand photography, you will understand video. Many technical details such as exposure and focus are very similar. The core differences we have listed above are easy to understand and master.

Next time you are shooting something, try flipping that dial over to movie mode and see what you can create. In an upcoming article we will introduce the basics of video storytelling.
